The Hidden Risks of Overestimating AI's Power Needs

Everyone agrees AI will consume enormous amounts of electricity over the next decade. But what if we're all wrong?

Over the last year, one story has dominated the news cycle in the world of climate and energy: AI and its insatiable hunger for electricity. Every week in 2024, it seemed like there was a new eye-popping forecast.

Last month two separate groups of researchers released reports that would have been shocking if they had been released two or three years earlier. Both groups forecasted huge growth in electricity demand. But by the end of 2024, the general media and public reaction to these reports was a respectful shrug. They caught my attention though.

The first report was released by Lawrence Berkeley National Laboratory (LBNL). It stood out to me for a few reasons. First, one of the authors of the report was Jonathan Koomey, one of the most vocal skeptics of the mainstream AI power demand narrative. Second, it was published by a national lab known for rigorous, measured research. If there was going to be a conservative estimate for data center electricity demand growth, this would be it.

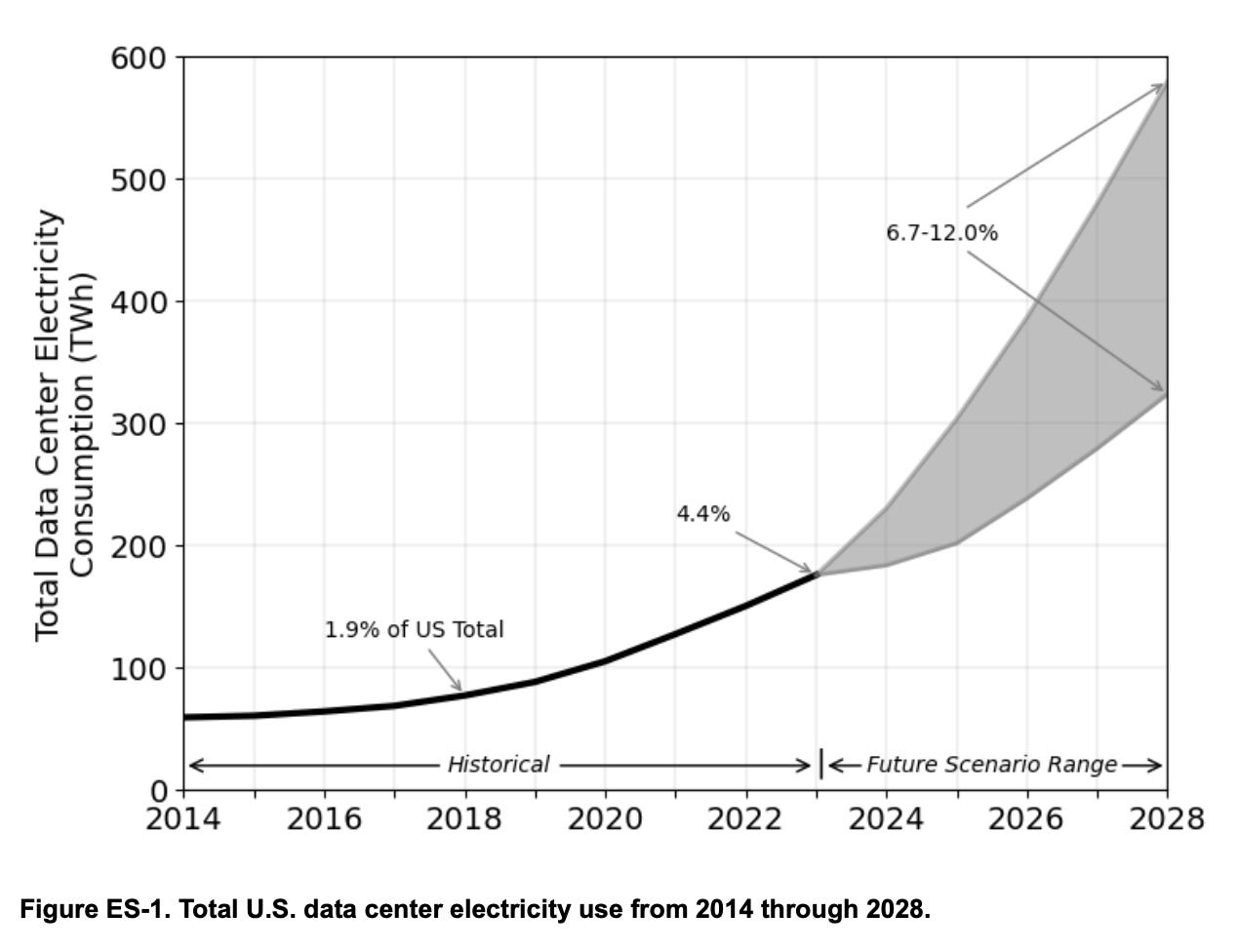

So what did these cautious researchers find? By 2028 data centers could consume between 6.7% and 12% of the entire country’s electricity. That’s 3 years from now.

The same month, the consulting group Grid Strategies released a report in which they found electricity demand could increase by about 16% by 2029.

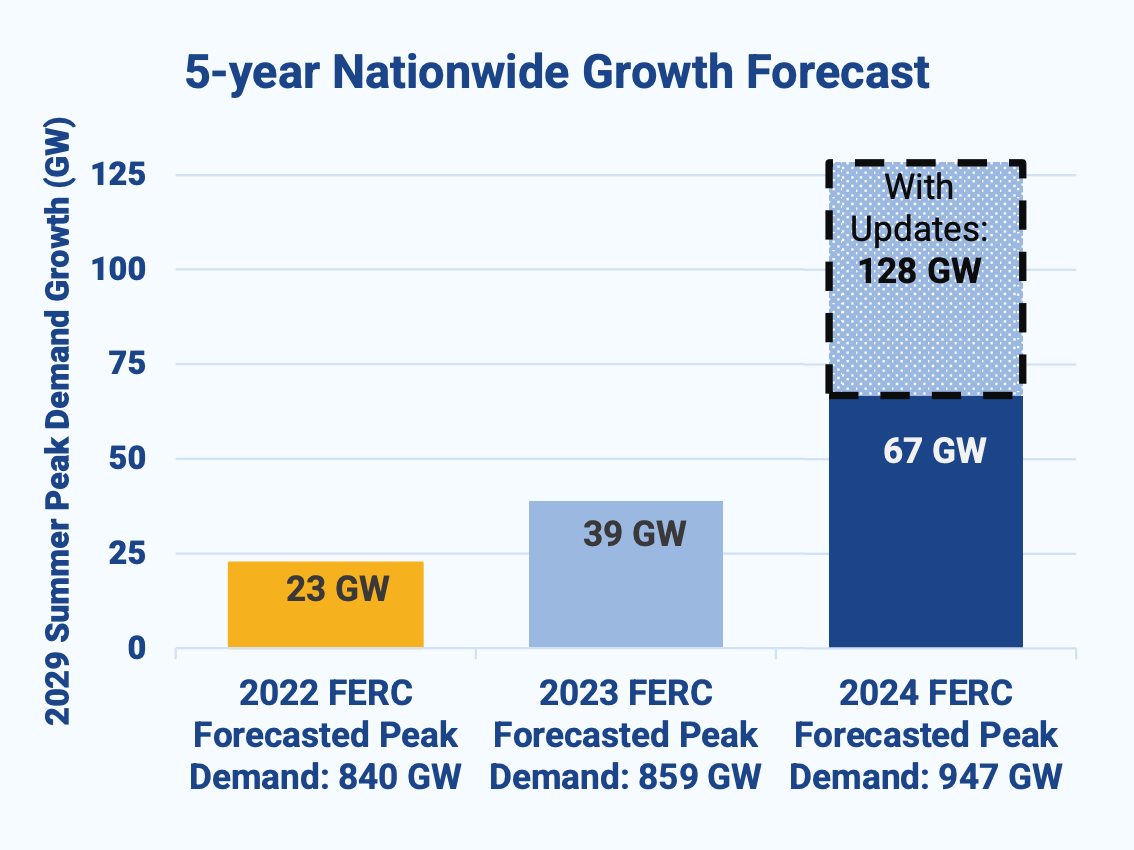

To get to that number, Grid Strategies tallied up “5-year load forecasts” from utilities across the country. These forecasts, unlike most that make headlines, have a certain weight to them in that they inform the infrastructure utilities build over a five year time period. They are what utilities expect and plan their power plants and transmission lines around. Whether or not that electricity demand—or load, to use the industry jargon—ever shows up, these forecasts affect what is or isn’t built.

The demand growth utilities are forecasting—that 16% by 2029—is massive compared to the recent past. In the 2010s, power demand in the US rose by 0.6% annually, according to Grid Strategies. That means this new era of high load growth would require “six times the planning and construction of new generation and transmission capacity.”
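To put the two numbers on the same footing, here is a rough back-of-the-envelope sketch that converts the 16% cumulative figure into a compound annual rate and compares it with the 0.6% annual rate of the 2010s. It assumes the 16% accrues over a five-year window, which is my reading of the "5-year load forecasts" and not something the report excerpt spells out. The function name is made up for illustration.

```python
def annualized_rate(total_growth: float, years: int) -> float:
    """Convert cumulative growth over `years` into a compound annual rate."""
    return (1 + total_growth) ** (1 / years) - 1


forecast_total = 0.16  # 16% cumulative demand growth by 2029 (from the text)
horizon_years = 5      # ASSUMPTION: the 16% is spread over five years
past_annual = 0.006    # 0.6% annual growth in the 2010s (from the text)

forecast_annual = annualized_rate(forecast_total, horizon_years)
ratio = forecast_annual / past_annual

print(f"Implied annual growth: {forecast_annual:.1%}")
print(f"Multiple of the 2010s rate: {ratio:.1f}x")
```

Under these assumptions the implied rate comes out to roughly 3% a year, about five times the 2010s pace. The report's own "six times" figure is quoted as written and may rest on a different baseline or measure, such as peak demand.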

Grid Strategies has been tracking these 5-year load forecasts for a couple years now. Their last report, released at the end of 2023, was one of the first to sound the alarm bells about rising electricity demand. It made headlines around the world when it found that utility power forecasts—the 5-year load growth forecast I mentioned earlier—rose by 170% between 2022 and 2023.

Utility growth forecasts grew by another 328% between the time of that report and the one that was released last month. With each year, these forecasts are growing larger.

An important caveat: These are just forecasts

At this point it’s nearly impossible to find a forecast for future data center electricity consumption that doesn’t anticipate huge growth over the next five years.

But there’s an important caveat that is almost never mentioned, let alone emphasized, in all the discourse about AI and the explosion in electricity demand: These are all just forecasts, predictions, informed guesses about the future.

I go back and forth between thinking AI is going to break the power grid and gobble up all the electrons in the world and a more skeptical position. It’s impossible to read the studies, forecasts, and corporate announcements about giga-scale data centers and not think there’s a good chance it will all materialize.

But the confidence most people make their predictions with is what I’m most skeptical about—mainly because humans are so bad at predicting the future.

Read more from Distilled

When the internet was supposed to use 50% of electricity

Long before the current AI power panic, there was another panic about a new technology with an insatiable thirst for electricity: the internet.

In 1999, Peter Huber and Mark Mills wrote an article for Forbes Magazine titled “Dig more coal -- the PCs are coming.” Reading that story today is eerie in its similarities to the current moment. In the opening paragraph, the authors write, “Somewhere in America, a lump of coal is burned every time a book is ordered on-line.” They go on to cite statistics about the staggering growth of the internet: “Traffic on the Web has indeed been doubling every three months.” And then they make a prediction: “It’s now reasonable to project that half of the electric grid will be powering the digital-Internet economy within the next decade.”

Huber and Mills were, of course, right in their prediction about the ubiquity and importance of the internet. The technology changed nearly everything about our society, economy, and politics. They were correct in predicting that internet traffic, adoption, and infrastructure would explode. And yet, their forecast for how much electricity demand would ensue was fantastically wrong.

By 2009, a decade after the article was published, data centers powering the internet consumed a couple percent of the country’s electricity—nowhere near the 50% share forecasted in the article.

So how did internet usage grow by many orders of magnitude while electricity demand remained flat? The short answer is data centers learned to do more with less. Semiconductor chips became more efficient at turning electricity into computing power. Data center operators learned to use less energy and water for cooling and ventilation. And companies shifted their computing from inefficient self-hosted servers to larger, more efficient data centers.

Meanwhile, the same trends were taking place in different forms across the entire US economy.

In 2007, energy modelers at the Energy Information Administration (EIA) forecasted that electricity demand would continue to grow much as it did in the decades prior. Their model predicted that the United States would consume 4,700 terawatt hours (TWh) by the year 2023.

But they were off by 838 TWh—more electricity than the United Kingdom and France combined consumed last year. Instead of growing, electricity demand flatlined for much of the next decade and a half.
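A quick sketch of what that miss implies, using only the two figures above. It assumes the forecast overshot reality (consistent with demand flatlining), so actual consumption is the forecast minus the miss. The variable names are illustrative.

```python
forecast_twh = 4700  # EIA's 2007 projection for 2023 (from the text)
miss_twh = 838       # size of the forecast error (from the text)

# ASSUMPTION: the forecast was too high, so actual = forecast - miss.
actual_twh = forecast_twh - miss_twh

overshoot_vs_actual = miss_twh / actual_twh      # error relative to what happened
overshoot_vs_forecast = miss_twh / forecast_twh  # error relative to the prediction

print(f"Implied actual consumption: {actual_twh} TWh")
print(f"Forecast overshot actual demand by {overshoot_vs_actual:.1%}")
print(f"The miss was {overshoot_vs_forecast:.1%} of the forecast itself")
```

On these numbers, the model overshot actual demand by a bit more than a fifth, a large error for a forecast that was meant to guide long-lived infrastructure.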

Some of the decline in electricity demand growth was due to the Great Recession. It’s worth noting, though, that even this major event was completely missed by forecasters.

In December 2007, The Wall Street Journal gathered a group of the country’s top economists and asked them to predict the likelihood the US would enter a recession in 2008. As Nate Silver writes in The Signal and the Noise, this expert group put the odds at 38%. But the economy was already in the worst recession in modern history.

The Great Recession doesn’t explain all of the gap between the EIA’s forecast and what panned out though. Much of the decline in demand growth was due to improved energy efficiency across the economy, which was itself a result of trends that would have been hard to predict.

New technologies like LED lights became cheap, leading to mass adoption and less electricity consumption. Americans elected and then re-elected a president in 2008 and 2012 who believed the government should encourage efficiency. Then that president passed laws forcing appliance manufacturers to make their products more efficient. States and local governments, finally waking up to the threat of climate change, passed their own policies—like new building codes—that encouraged or mandated energy efficiency.

Between 2006 and 2023, the world did what it has always done: It changed in completely unpredictable—and often mundane—ways.


Railroads, fiber optic cables, and bubbles gone bust

At this point, few people I know doubt the long-term promise and potential of AI. Even those who see today’s large language models as plagiarism machines that spit out B-tier haikus can imagine a world in five, ten, maybe 20 years where AI creates immense value for society.

But the scenario where AI gobbles up all the electricity—the one where it breaks the electric grid—assumes that this technology, and the data centers that power it, grow consistently and exponentially.

Few technologies throughout history have grown so steadily though. More often growth happens inconsistently.

In the 19th century everyone who saw or rode on a train immediately understood the promise of railroads. It was a technology that could get you or your crop from Point A to Point B faster than a horse. Hundreds of companies raised money from investors and built tracks all over the world. Today railroad tracks crisscross most populated places in the world. But between that initial boom and railroads’ ubiquity, there was a bust. In the years that followed the collapse of the 1873 railroad bubble, few tracks were laid.

At the turn of the 21st century, another technology emerged whose long-term value was obvious to many: fiber optic cables. As in the 19th century, the technology attracted huge amounts of investment. It was obvious what faster internet connections could enable. In 2000, 20 million miles of optical fiber were laid in the US. Then the tech bubble crashed. By 2003, just 5 million miles of fiber were installed. Here, too, the growth was anything but steady.

AI needs to start making a lot of money

Will AI go the way of the railroads and fiber, or will it tread its own path? That’s what Sequoia investor David Cahn calls “AI’s $600B Question.”

Using data from Nvidia’s quarterly reports, Cahn estimated that big tech companies would need to earn $600 billion from AI per year in order to make a return on their huge investments in chips and data centers. If they can’t do that soon, the AI bubble will likely burst and we can all stop worrying about all these new data centers for a few years.

$600 billion per year is a lot of money even in a global economy as large as ours. Energy analyst Michael Liebreich put the number in context like this:

If you assume the required $600 billion annual revenue will come from around a billion highly connected people in the world, each would have to spend an average of $600 per year, either as direct payments to AI providers or indirect payments for AI integrated into services used at home or at work. It’s not an outrageous amount – a similar magnitude to cell phone bills – but it is hard to see how it could quickly be met out of existing budgets across such a large swath of the global population.

AI boosters often cite the fact that ChatGPT became the fastest-growing consumer product in history when it reached 100 million users in less than a year. (Today closer to 200 million people use it.) But just over 10 million users actually pay roughly $200 per year for the product.

To reach the number Liebreich cites, another 990 million people would have to pay more than twice as much per year for AI products.
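The gap is easier to see with the arithmetic written out. This is a rough sketch using only the round numbers quoted above. It counts ChatGPT subscriptions alone as a stand-in for current consumer AI spending, which is a simplifying assumption and not a full picture of AI revenue. The variable names are illustrative.

```python
required_revenue = 600e9  # Cahn's $600 billion per year (from the text)
connected_people = 1e9    # Liebreich's "around a billion" people (from the text)
paying_users = 10e6       # "just over 10 million" paying users, rounded down
price_per_year = 200      # "roughly $200 per year"

per_person_needed = required_revenue / connected_people

# ASSUMPTION: subscriptions are the only revenue counted here.
current_revenue = paying_users * price_per_year
share_of_target = current_revenue / required_revenue

additional_payers = connected_people - paying_users
price_multiple = per_person_needed / price_per_year

print(f"Needed per person: ${per_person_needed:,.0f} per year")
print(f"Current subscription revenue: ${current_revenue / 1e9:.0f} billion")
print(f"Share of the $600B target: {share_of_target:.2%}")
print(f"Additional payers needed: {additional_payers / 1e6:,.0f} million")
print(f"Price multiple vs. today: {price_multiple:.1f}x")
```

On these round numbers, today's subscription revenue covers well under 1% of the target, and the remaining 990 million people would each need to pay about three times what current subscribers do.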

Given enough time, that seems completely plausible. But the question that causes so much panic isn’t if AI is going to do all our work for us and demand $600 per year in return by the year 2045; it’s whether or not that happens over the next few years.


Utilities are proposing the wrong solutions

For the last two years, utilities have been arguing that the only way to meet growing demand for electricity is to build large natural gas plants. AI’s fast growth is key to their argument. They say that today we just don’t have the technology—long duration storage, advanced nuclear, and the like—to provide power to data centers that consume electricity at all hours of the day.

None of this is surprising considering utilities’ incentives.

If the AI bubble pops and we don’t need all those new gas plants, it’s not the utility that foots the bill for the bad investment decision. In many cases it’s not even the marginal consumer of that new electricity, like a new data center. Instead, the costs are spread out across all ratepayers in the form of higher bills. Meanwhile, the utility is guaranteed a return on its investment of roughly 8%. Regulated monopolies are weird like that.

Utilities don’t have to worry much about the carbon their power plants put in the atmosphere either. A sane society would see this pollution as something like garbage and make these companies pay to take their trash to the dump instead of tossing it in the street. We don’t live in such a society though. Keeping a coal plant in operation for a few extra years carries little cost for utilities and huge cost for the rest of society in the form of future climate damage.

Utilities have little incentive to consider these downside risks. But the rest of us do.

Lessons from one of the last coal plants built in America

20 years ago, Xcel, the utility that delivers power to my house in Colorado, proposed one of the last coal plants to be built in America, Comanche Unit 3.

A few years into the 21st century, it was already obvious to many that a coal plant made no sense—even without taking into account its carbon emissions. Xcel was already building wind farms with success. They also had an opportunity to cut peak power demand by investing in energy efficiency.

But the company successfully made the case to regulators that a coal plant was the cheapest and most reliable way to meet growing power demand. This proved to be a costly decision for both Coloradans and the planet.

All of the economic models that Xcel used to back up its arguments assumed that the coal plant would operate until 2070. But as the cost of renewables fell and Colorado passed policies aimed at curbing emissions, the company was forced to change its plans. The plant is now set to close 40 years ahead of schedule.

Xcel will still make its full return on the $784 million plant; it’ll just do it by charging ratepayers hundreds of millions of dollars over the next few decades for a mothballed coal plant instead of electricity. (Again, regulated monopolies are weird like that.)

Comanche 3 hasn’t even been that good at delivering reliable electricity either. Mechanical failures took the plant out of operation for all of 2020. Despite being one of the newest fossil fuel generators in Xcel’s fleet, it’s been its least reliable.

All that downtime has pushed the actual price of electricity generation from Comanche to $66 per MWh, which is more than the company projected wind power to cost 20 years ago when it argued renewables were too expensive.

Xcel might argue that hindsight is 20/20 and they made the best decision at the time given the information. But they didn’t. Biased by perverse incentives, they pushed hard for a solution that would boost their share price, not the ones that would provide cheap, reliable, low-pollution power.

Environmental groups that pushed back on the company’s original plan largely predicted this exact scenario. They argued to regulators that any serious climate policy would doom the coal plant, which would go on to become Colorado’s single largest source of carbon emissions. That the state would pass such policies didn’t take a stretch of the imagination considering it had already passed the country’s first renewable portfolio standard.

Consumer advocates argued that higher investments in energy efficiency were more financially prudent. They could achieve the same result without the risks that Comanche posed. Others argued that Xcel’s assumptions for the cost of wind power were too high considering recent cost declines.

If regulators had listened to these arguments and pushed Xcel to build infrastructure that was resilient to uncertainty—that is, new power capacity that made sense no matter what happened over the coming decades—utility bills would be lower and our atmosphere would have millions of tons less carbon in it.


We have better solutions than gas power plants

Today, regulators across the country face similar decisions to the one Xcel and Colorado faced 20 years ago. Do they allow utilities to build the next generation of Comanche-like fossil fuel plants—this time powered by natural gas? Or do they force utilities to build infrastructure and solutions that are more resilient to uncertainty?

Today, like 20 years ago, there are alternatives that are cleaner, cheaper, and more reliable than fossil fuels. Importantly, these solutions make sense regardless of whether AI continues to boom or temporarily goes bust.

Take energy efficiency investments for example. As I wrote last year, between 2006 and 2021, utility energy efficiency programs cut electricity demand by 220 TWh, as much power as the entire state of Florida consumes each year. But many utilities are investing less in these programs than they were in 2019. Duke Energy, which has proposed massive gas plants across the Southeast, invests less in efficiency—and has more to gain—than most utilities in the country.

Or take virtual power plants, a fancy way to cut peak power demand. A 2023 Department of Energy report found that the country could meet the bulk of its new power demand between now and 2030 by deploying more VPPs.

Both of these investments can either make room for new data centers or reduce the need to burn fossil fuels if tech companies cut back their AI investments next year. You don’t need to be able to predict the future for them to pay off.

There’s also much we can do to speed up the deployment of renewables. We could replicate Texas’ “connect-and-manage” approach to grid interconnection; site solar and storage at retired coal plants; create super-powered energy parks; invest in large-scale transmission lines; reconductor existing transmission lines to double their capacity; and so much more.

With real ambition and smart policy, new clean energy capacity can meet even the highest AI power demand growth scenarios. And it can do it without the same stranded asset risk that fossil fuel plants carry. If massive data center demand doesn’t materialize, this new glut of power would make electricity cheaper, further accelerating the electrification of cars, buildings and factories. Clean energy, unlike fossil fuel power, is future-proof.

The physicist Niels Bohr once said, “It is difficult to make predictions, especially about the future.” He was right: None of us have a crystal ball. But we do have the ability to learn from the past and choose infrastructure that enables progress and prosperity no matter what happens.

Thanks for this Michael! It's the best reporting on the Great AI Data Center Power Freakout that I've read so far. I love that you ended with utilities using data center-driven load growth as a premise for more fossil fuel infrastructure investments--no surprise there. I'm hoping that one utility taking the bold step of meeting projected demand growth from data centers with *only* efficiency/VPP/etc. investments will get others doing the same. Great broad and deep coverage here, please revisit this story often for us!

great read thanks Michael…the incentives are quite perverse from a regulated utility standpoint as you highlight multiple times